Get Insights with Weights & Biases Dashboards and Azure AI RAG with FastAPI

Intro

Building AI models is only half the battle. How do you know if users actually like the outcomes, or if your chatbot is truly helpful? Are you able to track your AI Models?

AI systems thrive on continuous improvement, but without structured feedback developers are often left guessing. Raw user input, whether ratings, comments, or engagement metrics, tends to be scattered and difficult to analyze. Without a streamlined way to interpret what works and what doesn’t, refining an AI model becomes more art than science. A structured feedback loop changes that, making models more responsive, adaptable, and valuable in real-world applications.

This blog post demonstrates a simple yet powerful solution to track your AI Models: integrating a “thumbs up/down” feedback mechanism into an Azure AI WebApp and using Weights & Biases (W&B) reports to extract meaningful insights. By bridging direct user reactions with detailed analytics, AI developers can iterate faster, improve predictions, and boost user satisfaction.

Follow along as we walk through the practical steps of building this feedback system and uncover how structured evaluation can transform your model’s performance.

Why User Feedback Matters for AI

AI models are constantly evolving as they interact with real-world data, meaning their development is never truly complete. However, pinpointing performance issues early can be difficult without a structured evaluation system. To ensure continuous optimization, it is essential to implement a reliable tracking mechanism that monitors model behavior, identifies areas for improvement, and enhances overall effectiveness.

- Model Drift Detection: AI models may degrade over time as data distributions shift. Continuous feedback helps flag performance declines before they impact users.

- Data Labeling & Refinement: Not all training data is created equal. User responses highlight critical areas where re-labeling or fine-tuning can improve accuracy.

- User Experience (UX) Improvement: Beyond predictions, AI applications must be intuitive. Feedback reveals user frustrations and areas needing refinement.

- Iterative Development: AI thrives on continuous learning. A well-integrated feedback loop enables rapid iteration and deployment of better models.

In a modern MLOps lifecycle, user feedback isn’t just nice to have—it’s essential. By embedding evaluation metrics into development workflows, teams create AI systems that remain adaptive, efficient, and genuinely helpful.
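
To make the drift-detection point above a little more concrete, here is a minimal sketch of how a stream of thumbs up/down votes could be turned into a simple warning signal. The class name, the window size and the threshold are all assumptions made for illustration; they are not part of the demo application and are not tuned values.

```python
from collections import deque


class FeedbackDriftMonitor:
    """Tracks the thumbs-up rate over the most recent `window` votes."""

    def __init__(self, window: int = 50, min_rate: float = 0.7):
        # `window` and `min_rate` are illustrative defaults, not tuned values.
        self.votes = deque(maxlen=window)
        self.min_rate = min_rate

    def record(self, is_positive: bool) -> None:
        self.votes.append(1 if is_positive else 0)

    def positive_rate(self) -> float:
        # With no votes yet there is nothing to flag, so report 1.0.
        return sum(self.votes) / len(self.votes) if self.votes else 1.0

    def is_degraded(self) -> bool:
        # Only raise the flag once the window is full, to avoid noisy early alerts.
        window_full = len(self.votes) == self.votes.maxlen
        return window_full and self.positive_rate() < self.min_rate


monitor = FeedbackDriftMonitor(window=4, min_rate=0.5)
for vote in [True, True, False, False, False]:
    monitor.record(vote)
print(monitor.positive_rate(), monitor.is_degraded())
```

A rolling window is only one possible choice; it reacts quickly to recent votes at the cost of being noisy when traffic is low.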

Tech Stack & Tools to track your AI Models

To build an efficient AI feedback system, we rely on a combination of powerful tools that streamline experimentation, orchestration, and deployment.

- Azure AI Services – Leveraging Azure OpenAI for GPT-based interactions and Azure AI Search to enhance retrieval-based responses ensures robust AI performance.

- Weights & Biases (W&B) – Essential for experiment tracking, model monitoring, and fine-tuning dashboards that help optimize AI behavior based on user feedback.

- FastAPI + Vue – FastAPI powers the backend API and Vue the Web UI, enabling seamless interaction between users and the AI-powered system.

- Azure Blob Storage – Provides efficient storage for documents or embeddings, supporting scalable data handling and retrieval.

- Fine-Tuning Options – Depending on project needs, models can be refined either via OpenAI (using W&B sweeps) or Hugging Face models hosted on Azure ML, enhancing performance across specific tasks.

Small Scale Demo

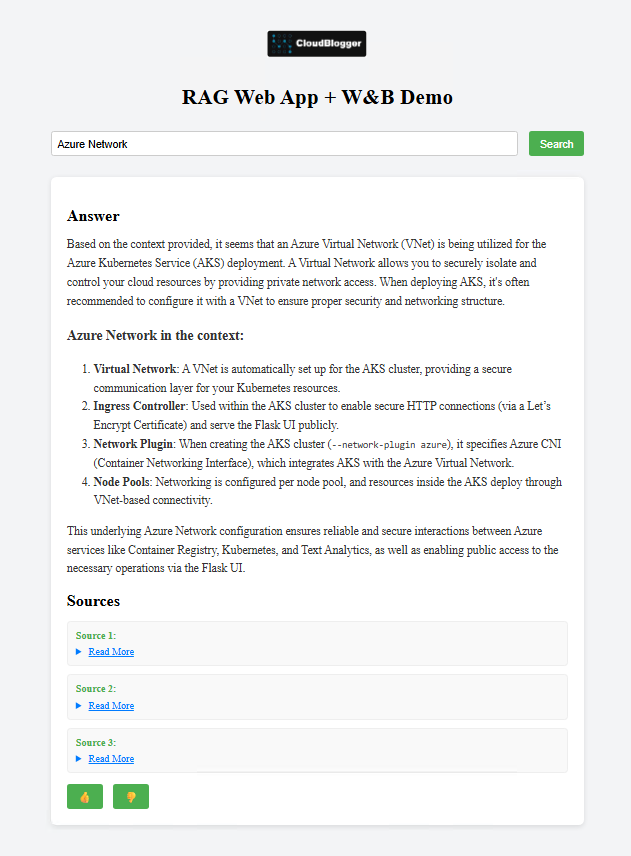

The Demo RAG Application provides a simple and efficient UI for users to interact with our model and give feedback on the quality and relevance of the response. Additional placeholders can be used to direct the user to the actual, referenced source of information.

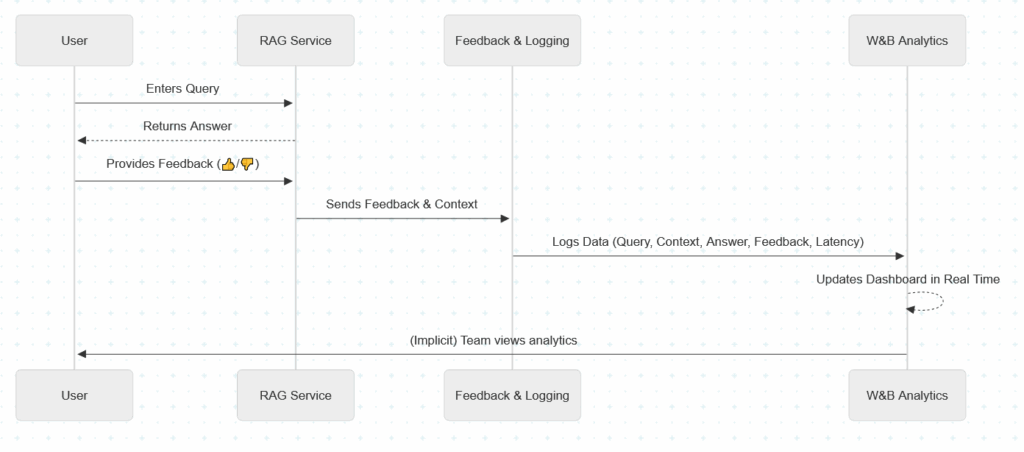

The following simplified sequence flow reveals the actual interactions of our tools and services:

Frontend RAG with Vue

Our RAG is a Web UI that lets users ask our Model about anything. The Model, however, is grounded in the content of an Azure AI Search vector index, sourced from a blob. If the context for the user’s question is found, the model returns an answer. The content is a series of blog posts from Cloudblogger, which we pre-processed into JSON format, generated embeddings for, and uploaded to Azure AI Search.

The page is very simple to recreate, and the code is going to be available in GitHub soon. Let’s see how we define the thumbs up / thumbs down actions and how they send user feedback to the backend, so you can track your AI Models effectively.
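
The pre-processing step is not shown in this post, so the following is only a rough sketch of what it might look like. The `id` and `content` field names match what the backend reads later; `title`, `url`, `contentVector`, the `build_search_documents` helper and the `fake_embed` stand-in are assumptions made for illustration.

```python
import hashlib
import json
from typing import Callable, Dict, List


def build_search_documents(
    posts: List[Dict[str, str]],
    embed: Callable[[str], List[float]],
) -> List[dict]:
    """Turn blog posts into JSON-serializable documents for a vector index."""
    documents = []
    for post in posts:
        documents.append({
            # Stable id derived from the URL, so re-uploads overwrite instead of duplicating.
            "id": hashlib.sha1(post["url"].encode("utf-8")).hexdigest(),
            "title": post["title"],
            "content": post["content"],
            "url": post["url"],
            # Hypothetical vector field name; it must match the index schema.
            "contentVector": embed(post["content"]),
        })
    return documents


def fake_embed(text: str) -> List[float]:
    # Stand-in so the sketch runs offline; a real pipeline would call an embedding model.
    return [float(len(text)), float(text.count(" "))]


posts = [{"title": "Hello", "url": "https://example.com/hello", "content": "A short post"}]
docs = build_search_documents(posts, fake_embed)
print(json.dumps(docs[0], indent=2))
```

In a real pipeline, `embed` would wrap a call to an embeddings deployment, and the resulting list could be passed to `SearchClient.upload_documents(documents=docs)`, provided the index defines matching fields.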

```vue
<script setup>
import { ref } from 'vue';

const query = ref('');
const loading = ref(false);
const result = ref(null);
const feedback = ref(null);

// Send the question to the backend and store the answer.
const handleSearch = async () => {
  loading.value = true;
  result.value = null;
  feedback.value = null;
  try {
    const response = await fetch('/api/query', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ query: query.value }),
    });
    if (!response.ok) {
      throw new Error(`Request failed with status ${response.status}`);
    }
    result.value = await response.json();
  } catch (error) {
    console.error('Error fetching result:', error);
  } finally {
    // Always clear the loading state, even when the request fails.
    loading.value = false;
  }
};

// Record the thumbs up / thumbs down vote for the current query.
const sendFeedback = async (isPositive) => {
  feedback.value = isPositive ? 'positive' : 'negative';
  try {
    await fetch('/api/feedback', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({
        query: query.value,
        feedback: isPositive ? '👍' : '👎'
      }),
    });
  } catch (error) {
    console.error('Error sending feedback:', error);
  }
};
</script>
```

Backend FastAPI with Python

We need two endpoints in our backend. The /api/query endpoint is clear enough: it retrieves context from Azure AI Search and calls the LLM to generate the answer. The /api/feedback endpoint is where you start to track your AI Models. Let’s have a look:

```python
from fastapi import FastAPI
from fastapi.middleware.cors import CORSMiddleware
from pydantic import BaseModel
from typing import List
from azure.search.documents import SearchClient
from azure.core.credentials import AzureKeyCredential
from openai import AzureOpenAI
import wandb
import os
import time
from dotenv import load_dotenv

# Load environment variables from .env file
load_dotenv()

app = FastAPI()

# CORS for frontend interaction (wide open for the demo; restrict origins in production)
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

# Initialize W&B
wandb.init(project="rag-demo", name="inference-tracking", reinit=True)

# Azure Search Configuration
SEARCH_ENDPOINT = os.getenv("AZURE_SEARCH_ENDPOINT")
SEARCH_KEY = os.getenv("AZURE_SEARCH_KEY")
SEARCH_INDEX = os.getenv("AZURE_SEARCH_INDEX")

search_client = SearchClient(
    endpoint=SEARCH_ENDPOINT,
    index_name=SEARCH_INDEX,
    credential=AzureKeyCredential(SEARCH_KEY)
)

# Azure OpenAI Configuration
DEPLOYMENT_NAME = os.getenv("AZURE_OPENAI_DEPLOYMENT")

openai_client = AzureOpenAI(
    api_key=os.getenv("AZURE_OPENAI_KEY"),
    api_version="2023-05-15",
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT")
)


class QueryRequest(BaseModel):
    query: str


class FeedbackRequest(BaseModel):
    query: str
    feedback: str


def retrieve_documents(query: str) -> List[dict]:
    # Semantic search over the index; expects a semantic configuration named "default"
    results = search_client.search(
        search_text=query,
        top=3,
        query_type="semantic",
        semantic_configuration_name="default"
    )
    return list(results)


def generate_answer(query: str, context_docs: List[str]) -> str:
    context_text = "\n".join(context_docs)
    prompt = (
        "You are an assistant. Use the following context to answer the question.\n\n"
        f"Context:\n{context_text}\n\n"
        f"Question:\n{query}\n"
    )
    response = openai_client.chat.completions.create(
        model=DEPLOYMENT_NAME,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt}
        ]
    )
    return response.choices[0].message.content.strip()


@app.post("/api/query")
async def query_handler(req: QueryRequest):
    start = time.time()
    docs = retrieve_documents(req.query)
    # The index is expected to expose "id" and "content" fields
    context = [doc["content"] for doc in docs]
    answer = generate_answer(req.query, context)

    # Log the full interaction, including latency, to W&B
    wandb.log({
        "query": req.query,
        "retrieved_doc_ids": [doc["id"] for doc in docs],
        "retrieved_text": context,
        "generated_answer": answer,
        "latency_ms": round((time.time() - start) * 1000, 2)
    })

    return {
        "answer": answer,
        "sources": context
    }


@app.post("/api/feedback")
async def feedback_handler(req: FeedbackRequest):
    # Anything other than a thumbs up is treated as negative feedback
    feedback_type = "positive" if req.feedback == "👍" else "negative"

    wandb.log({
        "feedback_query": req.query,
        "user_feedback": req.feedback,
        "feedback_type": feedback_type
    })

    return {"status": "feedback recorded"}
```

The wandb.log() calls are where we leverage the Weights & Biases package: each call sends the logged values to the run in our W&B project.
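
Numeric values are generally the easiest thing to chart and aggregate in a dashboard, so one option is to log a 1/0 score next to the feedback label. The sketch below is an assumption about how that could look, not the code running in the demo: `feedback_metrics`, `log_feedback` and the `feedback_score` key are hypothetical names, and the logger is passed in as a parameter so the example runs without a W&B account.

```python
from typing import Callable, Dict


def feedback_metrics(feedback: str) -> Dict[str, object]:
    """Map the thumbs emoji sent by the frontend to chart-friendly values."""
    # Same rule as the backend: anything other than a thumbs up counts as negative.
    is_positive = feedback == "👍"
    return {
        "feedback_type": "positive" if is_positive else "negative",
        # 1/0 lets a dashboard average this field into a satisfaction rate.
        "feedback_score": 1 if is_positive else 0,
    }


def log_feedback(query: str, feedback: str, log_fn: Callable[[dict], None] = print) -> dict:
    """Build the payload and hand it to the logger (print by default)."""
    payload = {"feedback_query": query, **feedback_metrics(feedback)}
    log_fn(payload)
    return payload


log_feedback("What is RAG?", "👍")
```

Inside the backend, `wandb.log` could be passed as `log_fn` once `wandb.init()` has been called.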

The package itself already brings quite a few metrics into our view.

The real metrics, however, are the ones we create ourselves, based on which signals we collect and register in the backend.
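
One signal worth considering is a link between each vote and the exact answer it refers to. In the demo, feedback carries only the query text, so the sketch below is an assumed extension rather than part of the application: `register_response`, `join_feedback` and the `response_id` field are hypothetical, and the in-memory dictionary stands in for whatever store a real deployment would use.

```python
import uuid
from typing import Dict, Optional

# In-memory store for the sketch; it is lost on restart and not shared across workers.
_responses: Dict[str, dict] = {}


def register_response(query: str, answer: str, latency_ms: float) -> str:
    """Store what was served and return an id the frontend can echo back."""
    response_id = uuid.uuid4().hex
    _responses[response_id] = {"query": query, "answer": answer, "latency_ms": latency_ms}
    return response_id


def join_feedback(response_id: str, is_positive: bool) -> Optional[dict]:
    """Combine a vote with the stored response; returns None if the id is unknown."""
    served = _responses.get(response_id)
    if served is None:
        return None
    return {**served, "response_id": response_id, "feedback_score": 1 if is_positive else 0}


rid = register_response("What is RAG?", "Retrieval-augmented generation.", 840.0)
print(join_feedback(rid, True))
```

With a joined record like this, a report could relate satisfaction to latency or to the retrieved documents, instead of looking at votes in isolation.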

Takeaways

As we integrate more and more Web and Mobile Applications with AI, it is essential to implement safeguards, including the collection of feedback, metrics, and performance insights. In our example, the feedback lets us pinpoint issues ranging from simple misconfigurations to more important aspects of a RAG Deployment, such as training biases, loose system prompts, and unreliable models. Additional feedback collection mechanisms can be layered on top to better track your AI Models.

Start with a free trial in the Weights & Biases portal, and follow the documentation or my GitHub repo on how to set up W&B in your Application. Spend some time creating those charts and reports, and you will gain important know-how on the brand new and exciting world of AI Development!
